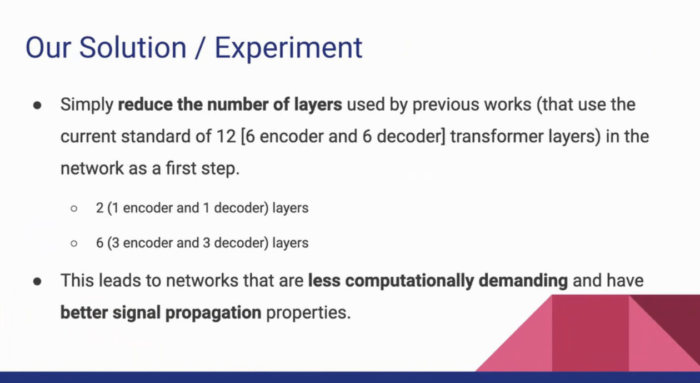

In his presentation, Elan argues that out-of-the-box transformer hyper-parameter configurations are ill-suited to low-resource tasks. Using them results in underperforming networks that also carry a higher computational cost.

Learn more about his team’s solution to this problem: *On optimal transformer depth for low-resource language translation* by Arnu Pretorius, Elan van Biljon, and Julia Kreutzer · Apr 26, 2020 · ICLR 2020

This talk was delivered in Addis Ababa, Ethiopia, at the ICLR 2020 “AfricaNLP Workshop – Putting Africa on the NLP Map” via the AI4D Africa program, with support from IDRC, Sida, and the Knowledge 4 All Foundation.