The aim of our project is to investigate the technological feasibility of deploying Unmanned Ground Vehicles for automated wildlife patrol, and to perform a preliminary analysis of other metadata collected from officials at a national park in Kenya. To this end, we seek to collect and publish a dataset of driving data across national park trails in Kenya, the first of its kind, and to use deep learning to predict the steering wheel angle when driving on these trails.

Setting up the data acquisition system

The data collection required a vehicle mounted with a camera to be driven across national park trails while recording the trail video as well as key driving signals such as steering wheel angle, speed and brake and accelerator pedal positions. We began design, installation and configuration of the data collection system in November and December 2019.

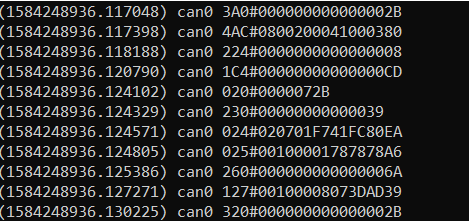

Our first idea was to procure sensors and attach them to the vehicle to obtain these driving signals. On further research, however, we found that most of these signals can be read from the CAN bus, which is exposed on the OBD-II (On-Board Diagnostics) port on most vehicles manufactured after 2008.

This information, however, is grouped and encoded under different parameter IDs. Identifying each driving parameter requires reverse engineering, a time-consuming activity that would take months by itself.

Furthermore, not all of the driving signals would be exposed on the CAN bus. The parameters exposed on the bus vary between vehicle manufacturers and models, and so does the encoding. After failing to understand the data read from the CAN bus of our personal vehicles, we decided to find a vehicle model which had already been reverse-engineered.

We were able to identify [1] and procure a Toyota Prius 2012 for the data collection, from which we could read the steering wheel angle, steering wheel torque, vehicle speed, individual wheel speeds and brake and accelerator pedal positions. We used a Raspberry Pi 3 microcomputer with the PiCan hat to read and log the driving signals.
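As an illustration of what reading a driving signal from the logged CAN traffic involves, the sketch below decodes a steering angle from one text log line. It assumes the log uses the `(timestamp) interface ID#DATA` layout produced by common Linux CAN logging tools. The identifier `0x025`, the signed 12-bit layout and the 1.5 degrees-per-bit scale are placeholder assumptions for illustration, not values confirmed by this project; the real ones come from the reverse-engineering reference for the vehicle.

```python
import re
from typing import NamedTuple, Optional

# Hypothetical values for illustration only. The real identifier, bit layout
# and scale must come from the reverse-engineering reference for the vehicle.
STEERING_ID = 0x025
DEGREES_PER_BIT = 1.5

# Assumed log line layout: "(1578912345.500000) can0 025#0010000000000000"
LOG_LINE = re.compile(r"\((\d+\.\d+)\)\s+(\w+)\s+([0-9A-Fa-f]+)#([0-9A-Fa-f]*)")


class SteeringSample(NamedTuple):
    timestamp: float
    angle_deg: float


def decode_steering(line: str) -> Optional[SteeringSample]:
    """Parse one log line; return a sample only if it carries the steering frame."""
    match = LOG_LINE.match(line.strip())
    if match is None:
        return None
    timestamp, _interface, can_id, payload = match.groups()
    if int(can_id, 16) != STEERING_ID:
        return None
    if len(payload) < 4 or len(payload) % 2 != 0:
        return None  # need at least two whole bytes of data
    data = bytes.fromhex(payload)
    # Assumed layout: signed 12-bit value, low nibble of byte 0 followed by byte 1.
    raw = ((data[0] & 0x0F) << 8) | data[1]
    if raw >= 0x800:
        raw -= 0x1000  # two's complement sign extension
    return SteeringSample(float(timestamp), raw * DEGREES_PER_BIT)
```

Each signal of interest (speed, pedal positions, wheel speeds) would need its own identifier and decoding rule in the same style.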

In order to create the dataset for training and testing the learning algorithm, each data sample would have to contain a video frame matched to the corresponding driving signals at that instance. That means all the video frames, as well as the driving signals, have to be timestamped.
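The matching step can be sketched as follows. This is a minimal example, assuming the frame timestamps and the signal timestamps are already on the same clock and that the signal log is sorted by time. The function names and the `max_gap` threshold are illustrative choices, not taken from the project code.

```python
from bisect import bisect_left
from typing import List, Optional, Sequence, Tuple


def interpolate_signal(
    frame_time: float,
    signal_times: Sequence[float],
    signal_values: Sequence[float],
    max_gap: float = 0.1,
) -> Optional[float]:
    """Linearly interpolate a logged signal at the time a frame was captured.

    Returns None if the frame lies outside the log, or if the two readings
    around it are more than max_gap seconds apart.
    """
    if not signal_times:
        return None
    if frame_time < signal_times[0] or frame_time > signal_times[-1]:
        return None
    i = bisect_left(signal_times, frame_time)
    if signal_times[i] == frame_time:
        return signal_values[i]
    t0, t1 = signal_times[i - 1], signal_times[i]
    if t1 - t0 > max_gap:
        return None
    weight = (frame_time - t0) / (t1 - t0)
    return signal_values[i - 1] + weight * (signal_values[i] - signal_values[i - 1])


def build_samples(
    frame_times: Sequence[float],
    signal_times: Sequence[float],
    signal_values: Sequence[float],
) -> List[Tuple[int, float]]:
    """Pair each frame index with its interpolated signal, skipping unmatched frames."""
    samples = []
    for index, frame_time in enumerate(frame_times):
        value = interpolate_signal(frame_time, signal_times, signal_values)
        if value is not None:
            samples.append((index, value))
    return samples
```

Interpolating rather than taking the nearest reading avoids a systematic lag when the signal is logged at a different rate from the video.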

The driving signals are automatically timestamped during logging on the Raspberry Pi, but most cameras don’t timestamp individual frames. Further, the camera’s internal clock would not be in sync with the RPi’s, which would leave the video frames and driving signals out of sync when creating the data samples.

That means a camera that could interface to the computer as a webcam would be needed, so each frame can be read and timestamped before being written to the video file. Driving on rough national park trails would also induce a lot of vibrations and require a camera with good stabilization. These were some of the challenges in selecting a camera for recording the driving video.

Check the project documentation on GitHub

We settled on the Apeman A80 action camera which has gyro stabilization, HD video recording and can also function as a webcam. OpenCV was used to read and record timestamped video to the computer.
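A minimal sketch of timestamped recording is shown below. It is not the project's actual recording code: the function names, the CSV log layout and the MJPG codec are assumptions made for illustration. The recording loop only relies on `read()` and `write()`, which are the methods `cv2.VideoCapture` and `cv2.VideoWriter` provide.

```python
import csv
import time
from typing import Callable


def record_with_timestamps(capture, writer, log_file, num_frames: int,
                           clock: Callable[[], float] = time.time) -> int:
    """Read frames, stamp each one with clock(), and write frame and stamp.

    capture needs read() -> (ok, frame) and writer needs write(frame), as
    cv2.VideoCapture and cv2.VideoWriter do. Returns the frames written.
    """
    log = csv.writer(log_file)
    log.writerow(["frame_index", "timestamp"])
    written = 0
    while written < num_frames:
        ok, frame = capture.read()
        if not ok:
            break  # camera disconnected or stream ended
        stamp = clock()  # taken immediately after the frame is read
        writer.write(frame)
        log.writerow([written, f"{stamp:.6f}"])
        written += 1
    return written


def record_from_webcam(video_path: str, log_path: str, seconds: float,
                       fps: float = 30.0, device: int = 0) -> int:
    """Record from a webcam-style camera; the codec choice is an assumption."""
    import cv2  # imported here so the loop above can be used without OpenCV

    capture = cv2.VideoCapture(device)
    width = int(capture.get(cv2.CAP_PROP_FRAME_WIDTH))
    height = int(capture.get(cv2.CAP_PROP_FRAME_HEIGHT))
    fourcc = cv2.VideoWriter_fourcc(*"MJPG")
    writer = cv2.VideoWriter(video_path, fourcc, fps, (width, height))
    try:
        with open(log_path, "w", newline="") as log_file:
            return record_with_timestamps(capture, writer, log_file,
                                          int(seconds * fps))
    finally:
        capture.release()
        writer.release()
```

Keeping the timestamps in a separate file means the frame index in the video can be looked up against the log later, regardless of the frame rate stored in the video container.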

Initially, we tried to connect the camera to the Raspberry Pi itself. But the RPi is a low-powered microcomputer: there was significant lag in recording, and it could not write video at a frame rate higher than 8 fps. We therefore decided to connect the camera to a laptop, which could comfortably record HD video at 30 fps, and to use the RPi only for logging the driving signals from the vehicle’s CAN bus.

This however presented a different challenge of being limited by the laptop battery. While the RPi can be charged using a portable power bank or directly from the car’s charging port, the laptop cannot. That meant significantly shorter data collection runs. We could only drive around continuously for 2 hours before we had to return to charge the laptop which took another 2 hours.

This forced us to revise our overall data collection target down from 50 hours to 20 hours. The time planned on national park trails was cut from 25 hours to 10 hours, with the other 10 hours to be collected on a mixture of tarmac roads and rural dirt roads.

There was also extensive testing of different video encoding methods to determine the best filesize versus quality tradeoff, as well as data collection code optimization to ensure minimum lag during the data logging.

Data collection

We began the data collection in January 2020 on tarmac and rural dirt roads. The idea behind this was to train the algorithm on a simpler dataset and then use transfer learning to get better results faster on the national park trails. The data was collected at various times of the day (early in the morning, at noon and late in the evening) in order to get a varied dataset in different lighting conditions.

While we were able to collect the data smoothly on tarmac roads, driving over the rural dirt roads proved impossible as they were riddled with potholes. Not only was it challenging to drive a low-body vehicle over the rough terrain, but the constant maneuvers made to go around the potholes meant that most of that data would be unusable, as it would present a different challenge altogether in training.

The challenge of driving a low-body vehicle on dirt roads also limited our choice of national parks, as we had to carefully select ones with smooth driving trails. Our plan to collect data from the Maasai Mara National Reserve had to be abandoned due to the bad road conditions there, and we opted to collect data from Nairobi National Park (8 hrs) and Ruma National Park (2.5 hrs) instead. Even these, however, were not without setbacks, including a flat tire and bumper damage.

Another challenge faced in the parks was internet connectivity. While a stable internet connection was not needed for the data collection which was done offline, a connection to the internet was needed when starting up the Raspberry Pi to allow it to initialize the correct datetime value.

This is because the RPi does not have a battery-backed real-time clock. Unless it has a connection to the internet, it resumes the clock from the last time saved before it was shut down, and so ends up showing the wrong time. That resulted in incorrect timestamps on the logged driving data, which could not be matched to the video timestamps.

This was observed while analyzing the driving data logs from one of the runs at Ruma National Park. Luckily, internet connectivity was regained towards the end of the run and the rest of the timestamps could be calculated correctly using the message baud rates.

Other minor issues faced in obtaining good quality data involved keeping the windshield clean while driving on dusty park trails where one is not allowed to alight from the vehicle, and securely mounting the camera inside the vehicle while driving over rough terrain.

Dataset preparation and Training

A significant portion of the data collected included driving around potholes, overtaking, stopping, U-turns etc., which would not be useful for predicting the steering wheel angle within the scope of this study. All these segments had to be visually identified and removed before preparing the dataset.
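Once the unwanted segments have been marked by hand, removing them is a simple filter over the timestamped samples. The sketch below assumes each sample is a `(timestamp, steering_angle)` pair and each marked segment is a `(start, end)` time interval; both layouts are illustrative assumptions.

```python
from typing import Iterable, List, Sequence, Tuple

Sample = Tuple[float, float]    # (timestamp, steering angle)
Interval = Tuple[float, float]  # (start, end), both inclusive


def drop_excluded(samples: Iterable[Sample],
                  excluded: Sequence[Interval]) -> List[Sample]:
    """Keep only the samples whose timestamp falls outside every excluded interval."""
    kept = []
    for sample in samples:
        timestamp = sample[0]
        if not any(start <= timestamp <= end for start, end in excluded):
            kept.append(sample)
    return kept
```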

Initially, we proposed to use a simple Convolutional Neural Network (CNN) model for training as in [2], where the steering wheel angle is predicted independently from each video frame. However, the steering angle also depends heavily on the speed of the vehicle. Driving is, moreover, a stateful process, where the current steering wheel angle depends on the previous wheel position.
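To give a sense of the size of such a per-frame model, the sketch below works out the feature map shapes for the convolutional stack, assuming the layer configuration reported in [2]: a 66x200 input, three 5x5 convolutions with stride 2, two 3x3 convolutions with stride 1, and no padding. The model we end up training may differ from this configuration.

```python
from typing import List, Tuple

# (output channels, kernel size, stride), assumed from the network in [2]
CONV_LAYERS = [(24, 5, 2), (36, 5, 2), (48, 5, 2), (64, 3, 1), (64, 3, 1)]


def conv_output(size: int, kernel: int, stride: int) -> int:
    """Output length of an unpadded convolution along one dimension."""
    return (size - kernel) // stride + 1


def feature_map_shapes(height: int = 66, width: int = 200) -> List[Tuple[int, int, int]]:
    """Return (channels, height, width) after each convolutional layer."""
    shapes = []
    for channels, kernel, stride in CONV_LAYERS:
        height = conv_output(height, kernel, stride)
        width = conv_output(width, kernel, stride)
        shapes.append((channels, height, width))
    return shapes


def flattened_size(height: int = 66, width: int = 200) -> int:
    """Number of features passed to the fully connected layers."""
    channels, out_height, out_width = feature_map_shapes(height, width)[-1]
    return channels * out_height * out_width
```

With the default input size the last feature map is 64 channels of 1x18, so 1152 features reach the fully connected layers that regress the steering angle.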

We therefore investigated the use of a more sophisticated temporal CNN model as in [3] using recurrent units such as LSTM and Conv-LSTM that could give more promising results. The above model however is very computationally expensive and would require a cluster of very expensive GPUs and still take days to train.

Using this model proved impossible to achieve within the given timeline and budget. We therefore decided to continue with our initial proposal using a static CNN model [2].

Currently we are in the process of building the dataset and learning model for the project. We are also working on preparing a preliminary analysis on the feasibility of automated wildlife patrol [4] based on other metadata collected from park officials.

We are grateful for the immense support that we always get from our mentor Billy Okal who, in spite of his busy schedule, finds the time to set up calls whenever we need to consult and always comes up with great ideas that address most of our concerns.

References

[1] C. Miller and C. Valasek, Adventures in Automotive Networks and Control Units, IOActive Inc., 2014, pp. 92-97.

[2] M. Bojarski et al., End to end learning for self-driving cars, 2016, arXiv:1604.07316.

[3] L. Chi and Y. Mu, Deep steering: Learning end-to-end driving model from spatial and temporal visual cues, 2017, arXiv:1708.03798.

[4] L. Aksoy et al., Operational Feasibility Study of Autonomous Vehicles, Turkey International Logistics and Supply Chain Congress, 2016.